Data & API Recipes

This page provides a growing list of examples for how to use Open Context data and APIs in specific tasks and workflows.

For a more detailed technical discussion of the Open Context API, please review Open Context's Web Services (APIs) documentation.

We also developed some example Python code that makes requests to Open Context's query interface and generates tabular data that can be analyzed, visualized, and saved as a CSV file. The code for making requests to Open Context's APIs can be found at this link. This code powers a Jupyter Notebook visualization of zooarchaeological measurement data that you are welcome to review and adapt.
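The linked code is the reference version. As a much smaller illustration of the same idea, the sketch below uses only the Python standard library: `fetch_json` downloads and decodes a JSON response, and `records_to_csv_text` writes a list of flat records to CSV. Both function names are hypothetical, and the sketch deliberately does not assume how Open Context's JSON response is laid out; you would still need to pull the list of records out of the response yourself (see the API documentation).

```python
import csv
import io
import json
import urllib.request


def fetch_json(url, timeout=60):
    """Hypothetical helper: download a URL and decode the body as JSON."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def records_to_csv_text(records):
    """Hypothetical helper: turn a list of flat dicts into CSV text.

    The header is the union of keys across all records (first-seen order),
    so a record missing an attribute just gets an empty cell.
    """
    fieldnames = []
    for rec in records:
        for key in rec:
            if key not in fieldnames:
                fieldnames.append(key)
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(records)
    return buf.getvalue()


def save_csv(records, path):
    """Write the CSV text for `records` to a file at `path`."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        f.write(records_to_csv_text(records))
```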

Getting Search Results as GIS (GeoJSON) or CSV Formats

Open Context has powerful search functions to browse and filter data records created by many different contributors and projects. You can use Open Context's Web interface to explore these data, find records relevant to your interests, and then download them to your own desktop.

Downloading Search Results (CSV or GeoJSON)

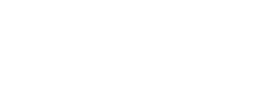

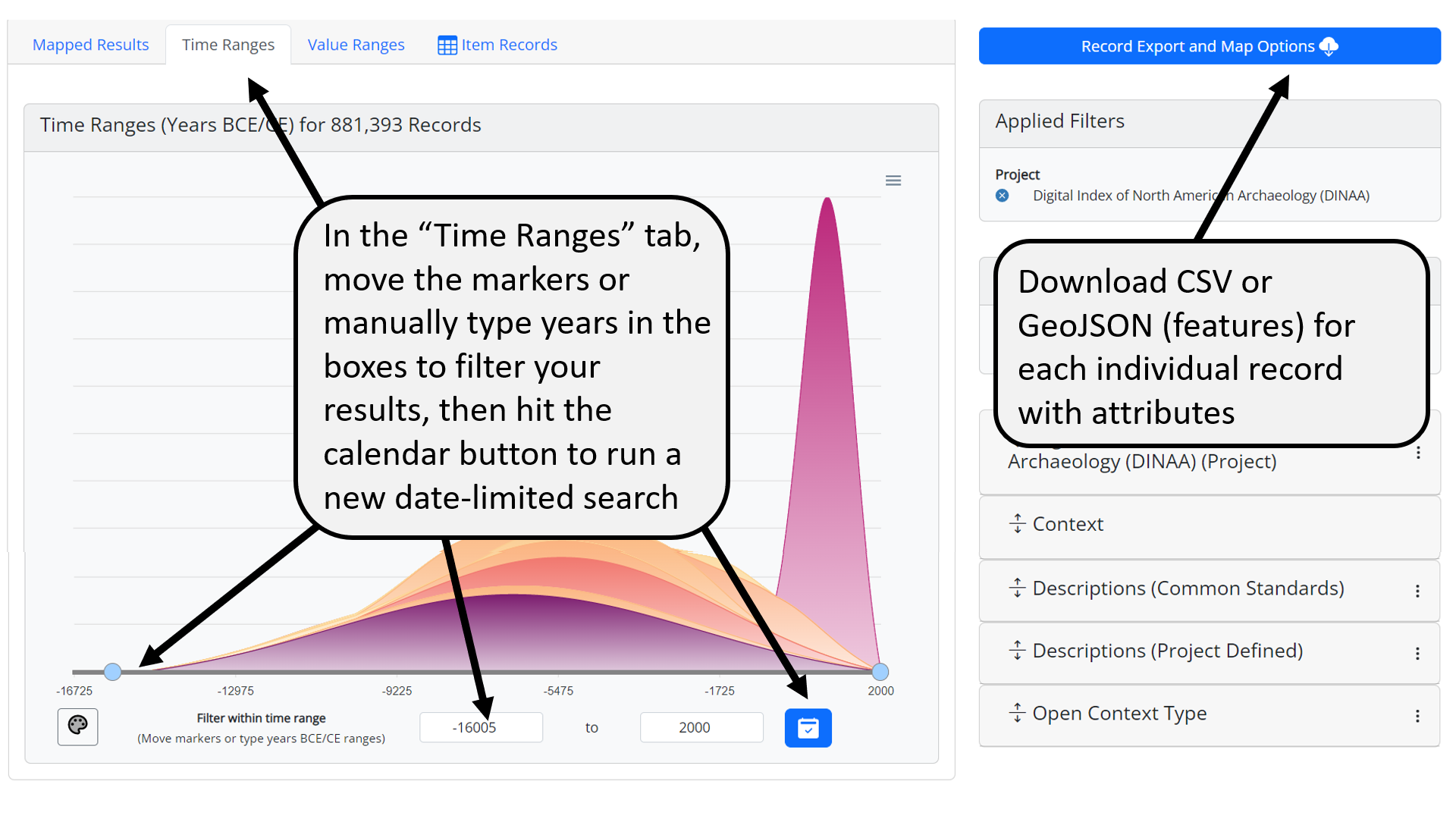

To download data, start by searching and filtering through the data records published by Open Context at this URL: https://opencontext.org/query/. If you want to download GIS or CSV data from the Digital Index of North American Archaeology (DINAA) project, you can select DINAA in the "Projects" filter option, which takes you to this URL: https://opencontext.org/query/?proj=52-digital-index-of-north-american-archaeology-dinaa. This URL shows a map of DINAA's current coverage and a chart summarizing the distribution of records through time. Use the "Filter Options" on the right to filter based on different attributes in the data. Once you have filtered the data to select records relevant to your interests, use one of the download options discussed below.
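If you prefer to script your searches, the same filtered URL can be assembled in code. This is a minimal sketch: `build_query_url` is a hypothetical helper, and only the `proj` filter and the `.json` variant of the query path are taken from this page; check the API documentation for other parameter names.

```python
from urllib.parse import urlencode

QUERY_BASE = "https://opencontext.org/query/"


def build_query_url(params, fmt=""):
    """Hypothetical helper: build an Open Context query URL from filter params.

    `fmt` is appended to the path (e.g. ".json" for machine-readable results);
    an empty string gives the ordinary Web page.
    """
    url = QUERY_BASE + fmt
    if params:
        url += "?" + urlencode(params)
    return url


dinaa = {"proj": "52-digital-index-of-north-american-archaeology-dinaa"}
print(build_query_url(dinaa))
```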

Summarized Data in Geospatial Tiles (GeoJSON format)

This is the fastest way to get data aggregated as counts of records in different square regions. The API documentation describes how you can get "geo-facets" (the summary of counts in square regions). In the case of DINAA, Open Context limits tile resolution to roughly 17km in order to protect site security. Other projects may have much higher-resolution geospatial data.

Example Summary Geotiles
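To make "counts of records in square regions" concrete, the sketch below bins longitude/latitude points into square cells and counts them. It is a simplified illustration, not Open Context's actual geo-facet tiling (which the API documentation describes); the function names and the 0.5-degree default cell size are hypothetical, and cells that are square in degrees are not square on the ground.

```python
import math
from collections import Counter


def cell_key(lon, lat, size_deg):
    """Return the (column, row) index of the square cell containing a point."""
    return (math.floor(lon / size_deg), math.floor(lat / size_deg))


def count_in_cells(points, size_deg=0.5):
    """Hypothetical helper: count (lon, lat) points per cell of `size_deg` degrees."""
    return Counter(cell_key(lon, lat, size_deg) for lon, lat in points)


def cell_center(key, size_deg=0.5):
    """Longitude/latitude of a cell's center, e.g. for mapping its count."""
    col, row = key
    return ((col + 0.5) * size_deg, (row + 0.5) * size_deg)
```

Reporting a cell's center instead of each point's exact position is one simple way to coarsen locations, similar in spirit to the ~17km tiles used for DINAA.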

Individual Record Data (CSV or GeoJSON formats)

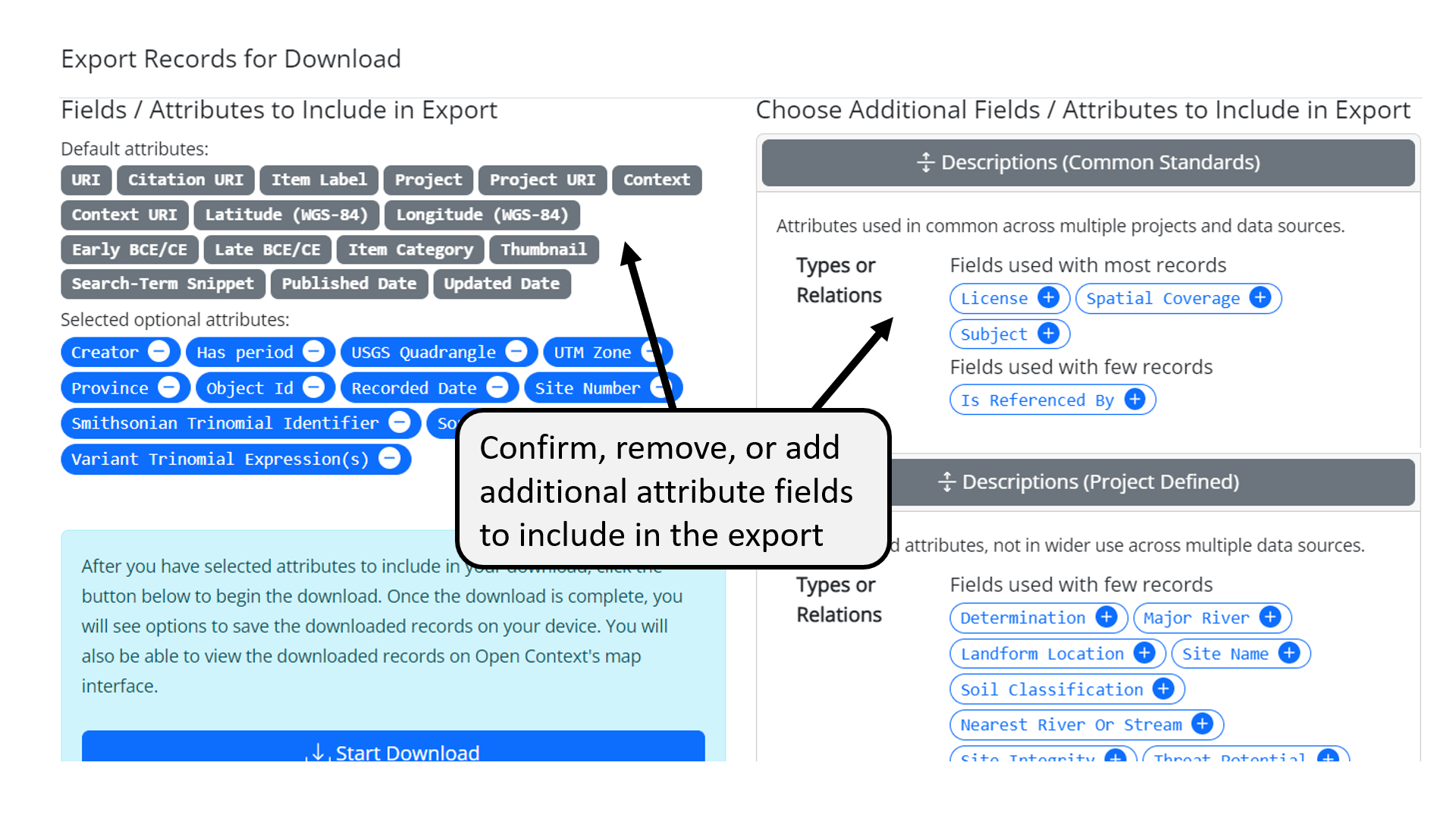

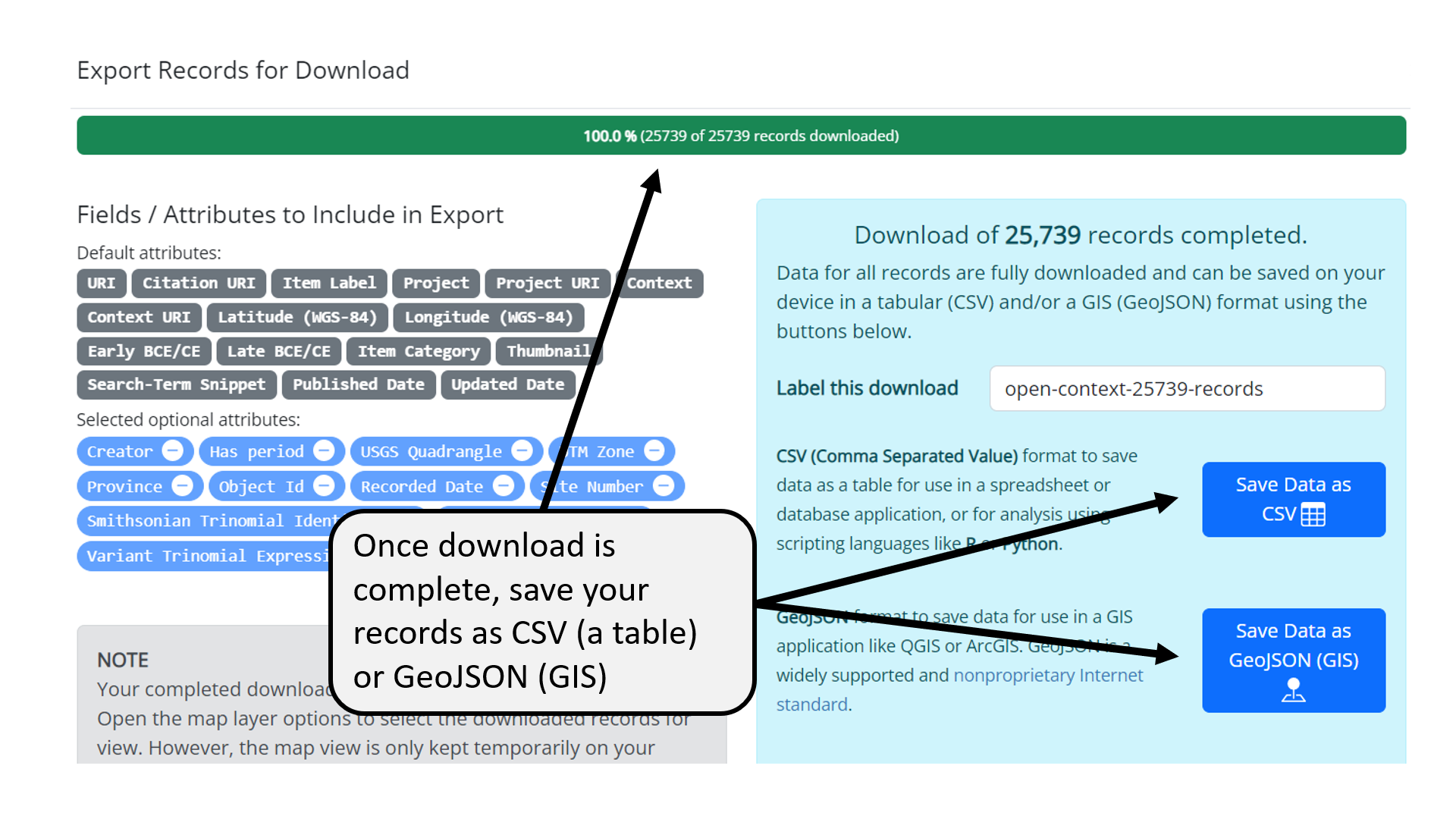

Getting individual data records is slower but offers more analytic flexibility and richer attribute information. The cloud-download button on the right of the search results table opens a dialog box to get CSV or GeoJSON data for each record in your search query results. To protect site security for the DINAA project, point locations come from the centers of ~17km tile regions. In other cases, location information may be more precise.

1: Individual Record Download Button

2: Individual Record Download Dialog

3: Individual Record Download CSV or GeoJSON Save Options
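After downloading, you can also read the individual-record GeoJSON without GIS software. GeoJSON (RFC 7946) lists positions in longitude, latitude order, a common source of swapped coordinates. This sketch assumes point geometries and simply passes through each feature's `properties`; `load_points` is a hypothetical helper, and it makes no assumptions about Open Context's attribute names.

```python
import json


def load_points(geojson_text):
    """Hypothetical helper: yield (lon, lat, properties) for each Point feature.

    Features with other geometry types (e.g. polygons) are skipped.
    """
    data = json.loads(geojson_text)
    for feature in data.get("features", []):
        geom = feature.get("geometry") or {}
        if geom.get("type") != "Point":
            continue
        lon, lat = geom["coordinates"][:2]  # RFC 7946: longitude comes first
        yield lon, lat, feature.get("properties") or {}
```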

After Download: Loading GeoJSON into QGIS or ESRI ArcGIS

You can use Excel or similar spreadsheet software, relational databases, GIS, or many other software applications to work with the CSV exports from Open Context. Because GeoJSON is a popular open format and an official Internet standard, most GIS applications can load GeoJSON exports. Here are a few tips for using Open Context's GeoJSON with common desktop GIS applications:

QGIS

- Use the "Add Vector Layer" command and choose the GeoJSON format.

- GeoJSON can be converted to other vector formats, e.g., ESRI Shapefile, KML, SpatiaLite, and many others. To save the layer in another format, right-click on the layer and choose "Save as…" from the context menu. Or, select the layer and choose "Layer - Save as…" from the menu.

- GeoJSON comes with attribute data that QGIS puts into an attribute table. You can access these attributes with the right-click context menu or the "Layer" menu on the menu bar. The following details some attribute data you can expect for different types of GeoJSON downloads from Open Context:

  - Summarized geospatial tiles only have attributes for record counts and the chronological coverage of the records contained in each tile.

  - GeoJSON for individual records has many more attributes.

ESRI ArcGIS

GeoJSON is an official Internet standard for geospatial data, but you may need to install the "Data Interoperability" extension to ArcGIS to directly load a GeoJSON file as a layer. If your subscription does not include this extension, you can quickly and easily convert the GeoJSON to a shapefile with QGIS (see above). You can also use a variety of other open source converters (see example). See also these help recommendations.

If you find it too difficult to use ArcGIS with an open standard like GeoJSON, then consider switching to an open source alternative like QGIS.

Bulk Downloads of Prepared CSV Tables

Open Context offers dynamic query and search services across many projects at once. While this offers a great deal of convenience and flexibility, it can be slow for bulk downloads of data. To support more rapid retrieval of large datasets, Open Context also makes downloadable tables available in certain projects. For example, the Zooarchaeology of Öküzini Cave project links to a variety of tables where you can rapidly download bulk datasets in the CSV format.
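Prepared tables can be large, so it helps to stream them to disk rather than read them into memory in one go. A minimal sketch, assuming you have copied the URL of one of a project's CSV tables; `download_file` and `iter_csv_rows` are hypothetical helper names.

```python
import csv
import shutil
import urllib.request


def download_file(url, path, chunk_size=1 << 20):
    """Hypothetical helper: stream a (possibly large) download to disk.

    copyfileobj copies in chunks, so at most `chunk_size` bytes of the
    response are held in memory at a time.
    """
    with urllib.request.urlopen(url) as resp, open(path, "wb") as out:
        shutil.copyfileobj(resp, out, chunk_size)
    return path


def iter_csv_rows(path):
    """Hypothetical helper: yield rows of a saved CSV file as dicts, one at a time."""
    with open(path, newline="", encoding="utf-8") as f:
        yield from csv.DictReader(f)
```

For example, `for row in iter_csv_rows(download_file(table_url, "table.csv")): ...` processes one row at a time, where `table_url` stands in for the link you copied.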

Getting More Expressive Data (GeoJSON-LD)

Remember that some data formats and software have limited capabilities for modeling data. The CSV format, though simple and easy to use in spreadsheet or relational database applications, "flattens" data into a table of rows and columns. While this works for simple data structures, it is hard to transmit nested ("tree-like") structures or even multi-valued attributes using a CSV table. Similarly, many desktop GIS systems also expect attribute data in flat table structures. Open Context data often has richer data structures that require more expressive data formats (like JSON / JSON-LD). If you are a "power user" and need to work with more richly modeled data from Open Context, you should work with Open Context's APIs (documented here).
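To see concretely what "flattening" costs, here is a small hypothetical function that turns a nested record into a single CSV-ready row. Nested keys become dotted column names and multi-valued attributes are joined into one cell, so the tree structure survives only as a naming convention. The function and the example record are invented for illustration; they are not Open Context's export logic or real Open Context data.

```python
def flatten(obj, prefix="", sep=".", list_sep="; "):
    """Hypothetical helper: flatten nested dicts/lists into one level of keys.

    Lists of plain values are joined into a single string; lists containing
    dicts or lists are indexed (key.0.field, key.1.field, ...).
    """
    flat = {}
    if isinstance(obj, dict):
        for key, value in obj.items():
            name = f"{prefix}{sep}{key}" if prefix else str(key)
            flat.update(flatten(value, name, sep, list_sep))
    elif isinstance(obj, list):
        if all(not isinstance(v, (dict, list)) for v in obj):
            # Multi-valued attribute: squeeze every value into one cell.
            flat[prefix] = list_sep.join(str(v) for v in obj)
        else:
            for i, value in enumerate(obj):
                name = f"{prefix}{sep}{i}" if prefix else str(i)
                flat.update(flatten(value, name, sep, list_sep))
    else:
        flat[prefix] = obj
    return flat
```

For instance, `flatten({"taxa": ["Bos", "Ovis"], "context": {"site": "X"}})` gives `{"taxa": "Bos; Ovis", "context.site": "X"}`.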

Linking to Site File Records in the Digital Index of North American Archaeology (DINAA)

The Digital Index of North American Archaeology (DINAA) is an NSF-sponsored project led by David G. Anderson and Joshua Wells that uses Open Context to publish archaeological site file records on the Web. Administrative offices from several states in the US are participating in the project.

The DINAA project has included Smithsonian Trinomials for these site file records. Since archaeologists and other cultural heritage professionals routinely use Smithsonian Trinomials, these identifiers can be powerful tools for cross-referencing different datasets.

Linking to DINAA with OpenRefine / Google Refine

- Click on the column with the trinomial identifier to select it. Create a column for the DINAA identifier associated with the trinomial using the "Add Column By Fetching URLs..." command. You can name the field something like 'dinaa-uri'.

- In the expression field, write:

"https://opencontext.org/query/.json?proj=52-digital-index-of-north-american-archaeology-dinaa&response=uri-meta&trinomial=" + escape(value, "url")

The above expression will get a list of possible DINAA identifiers that match your trinomial. The vast majority of cases will return only one DINAA identifier per trinomial (but not always, since state site records do not necessarily have a one-to-one relationship with trinomial identifiers). You can set the "throttle delay" to 500 milliseconds (this delay makes pauses between requests so you don't overwhelm Open Context's server). If you have a big dataset, half-second delays add up.

- Completing the step above will populate this new column with JSON data (a "machine-readable" data format) from Open Context. You'll need to process these results further with the Edit Cells > Transform... command.

In the expression field, write:

value.parseJson()[0]["uri"]

The above expression generates a link to the Open Context page representing the most likely match to the trinomial ID in your dataset. Since Open Context emphasizes linked data, the link represents the primary identifier for the site. For now, this link will go to the old version of Open Context (still running the legacy software / database). To see the new (more cleaned-up) version of the data, you'll need to substitute "http://opencontext.org" with "https://opencontext.org". Also note that the result has other useful data, including lat/lon coordinates, time spans, and geographic context (state, county), as well as a text snippet containing the matching trinomial.

- Check the results! For the most part this will work, since trinomials are fairly unique and usually map clearly to a state dataset. However, there may be some edge cases that require human problem solving. Spot check the results to make sure they make sense and reference the appropriate sites.
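If you would rather script these steps than use OpenRefine, the sketch below follows the same recipe in Python: build the lookup URL, pause between requests, take the first match's `uri`, switch it to https, and flag trinomials with zero or several matches for manual review. It assumes, as the `value.parseJson()[0]["uri"]` expression implies, that the response is a JSON list of match objects with a `uri` key. The function names are hypothetical, and `urllib.parse.quote` is only a close stand-in for OpenRefine's `escape(value, "url")`.

```python
import json
import time
import urllib.parse
import urllib.request

DINAA_QUERY = (
    "https://opencontext.org/query/.json"
    "?proj=52-digital-index-of-north-american-archaeology-dinaa"
    "&response=uri-meta&trinomial="
)


def trinomial_url(trinomial):
    """Hypothetical helper: the OpenRefine lookup URL, with the value URL-escaped."""
    return DINAA_QUERY + urllib.parse.quote(trinomial, safe="")


def best_uri(matches):
    """Mirror value.parseJson()[0]["uri"], upgrading http:// to https://.

    Returns None when the lookup found no candidates.
    """
    if not matches:
        return None
    return matches[0]["uri"].replace("http://opencontext.org", "https://opencontext.org", 1)


def classify(matches):
    """Label a lookup result for spot checks: 'none', 'single', or 'multiple'."""
    if not matches:
        return "none"
    return "single" if len(matches) == 1 else "multiple"


def link_trinomials(trinomials, delay=0.5):
    """Hypothetical helper: look up each trinomial, pausing `delay` seconds between requests.

    Returns {trinomial: (best_uri_or_None, classification)}.
    """
    linked = {}
    for i, tri in enumerate(trinomials):
        if i:
            time.sleep(delay)  # same idea as OpenRefine's "throttle delay"
        with urllib.request.urlopen(trinomial_url(tri), timeout=60) as resp:
            matches = json.loads(resp.read().decode("utf-8"))
        linked[tri] = (best_uri(matches), classify(matches))
    return linked
```

At the 0.5 second delay, 10,000 trinomials spend at least 5,000 seconds (about 83 minutes) just waiting, which is why the delays add up on big datasets.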

Geospatial Utilities

Open Context includes a library called "GDAL2Tiles", developed by Klokan Petr Pridal as part of the Google Summer of Code 2007 & 2008.

We are testing this library to help with various useful transformations of geospatial coordinates. Currently, Open Context supports a service to convert Web Mercator meters to WGS-84 Lat / Lon coordinates, described below:

Convert from Web Mercator Meters to WGS-84 Lat / Lon Coordinates

- This example assumes the east-west meters field is called the "X" column and the north-south meters field is called the "Y" column. Click on the "Y" column to select it. Create a column for the JSON result of the coordinate transform using the "Add Column By Fetching URLs..." command. You can name the field something like 'json-transform'.

- In the expression field, write:

"/utilities/meters-to-lat-lon?mx=" + cells["X"].value + "&my=" + value

The above expression will get a small JSON response with latitude ("lat") and longitude ("lon") equivalents for these Web Mercator meter values. You can set the "throttle delay" to as low as 333 milliseconds (this delay makes pauses between requests so Open Context won't interpret repeated requests as abuse). If you have a big dataset, 1/3 second delays add up.

- Completing the step above will populate this new column with JSON data (a "machine-readable" data format) from Open Context. You'll need to process these results further with the Edit Cells > Transform... command.

In the expression field, write:

value.parseJson()["lon"]

The above expression extracts the longitude value from the JSON results. Similarly, you can make a new field for latitude.

In the expression field, write:

value.parseJson()["lat"]

- Check the results! We're borrowing code here, and we don't fully understand whether it has problems or limitations. We provide this service as a convenience, but strongly recommend consulting with geospatial data experts before trusting these results.
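As a cross-check, the sketch below calls the service from Python and compares its answer to a local computation. The local functions use the standard spherical Web Mercator formulas, which to our understanding are the ones GDAL2Tiles implements (in its `GlobalMercator` class), but treat that as an assumption. The expression above uses a relative path, so the host in `UTILITY_URL` is also an assumption, as are all function names.

```python
import json
import math
import urllib.parse
import urllib.request

# Assumption: the relative path in the OpenRefine expression is served from this host.
UTILITY_URL = "https://opencontext.org/utilities/meters-to-lat-lon"

# Web Mercator treats the Earth as a sphere whose radius is the WGS-84
# semi-major axis; ORIGIN_SHIFT is half the projected equator, in meters.
EARTH_RADIUS = 6378137.0
ORIGIN_SHIFT = math.pi * EARTH_RADIUS


def service_url(mx, my):
    """Hypothetical helper: request URL for east-west (mx) and north-south (my) meters."""
    return UTILITY_URL + "?" + urllib.parse.urlencode({"mx": mx, "my": my})


def parse_lat_lon(json_text):
    """Extract (lat, lon) from the service's JSON, like value.parseJson()["lat"]."""
    data = json.loads(json_text)
    return float(data["lat"]), float(data["lon"])


def fetch_lat_lon(mx, my):
    """Hypothetical helper: ask the Open Context service to convert one coordinate pair."""
    with urllib.request.urlopen(service_url(mx, my), timeout=60) as resp:
        return parse_lat_lon(resp.read().decode("utf-8"))


def local_meters_to_lat_lon(mx, my):
    """Spherical Web Mercator meters -> WGS-84 (lat, lon) in degrees, computed locally."""
    lon = mx / ORIGIN_SHIFT * 180.0
    lat = math.degrees(2.0 * math.atan(math.exp(my / EARTH_RADIUS)) - math.pi / 2.0)
    return lat, lon


def lat_lon_to_meters(lat, lon):
    """Inverse transform, useful for round-trip checks."""
    mx = lon * ORIGIN_SHIFT / 180.0
    my = EARTH_RADIUS * math.log(math.tan(math.pi / 4.0 + math.radians(lat) / 2.0))
    return mx, my


def agrees(mx, my, tolerance_deg=1e-6):
    """True if the service and the local formula agree within `tolerance_deg` degrees."""
    remote = fetch_lat_lon(mx, my)
    local = local_meters_to_lat_lon(mx, my)
    return all(abs(r - l) <= tolerance_deg for r, l in zip(remote, local))
```

A disagreement beyond rounding error is a good reason to consult a geospatial expert before using the converted coordinates.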